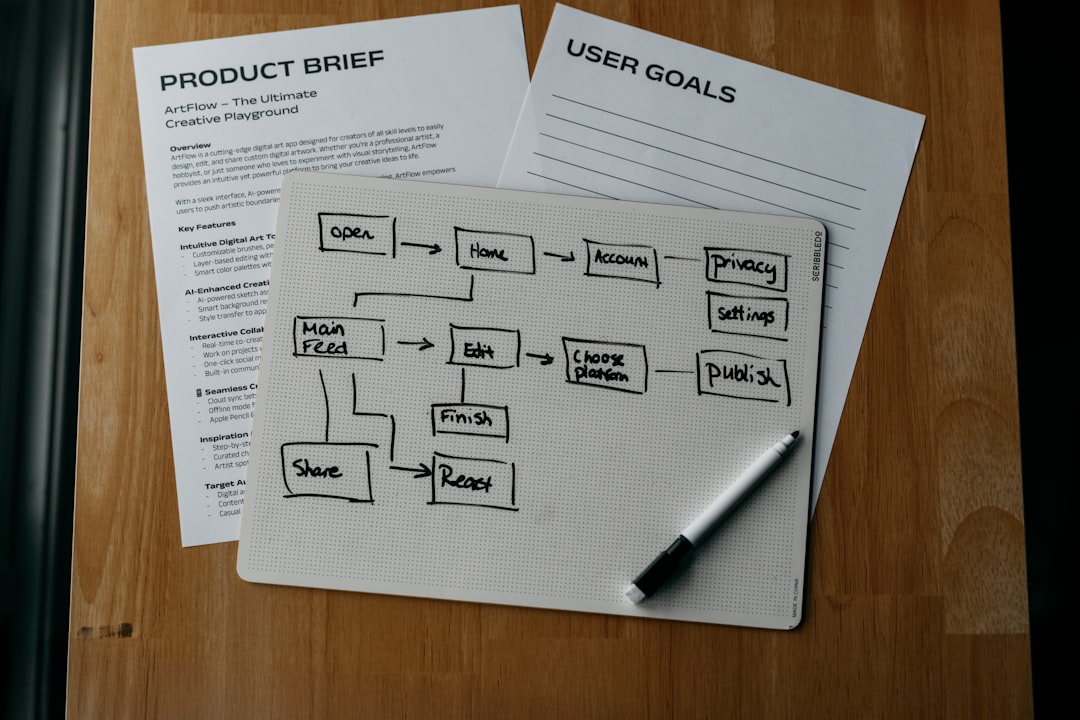

Imagine you walk into a giant clothing store. There are sections for men, women, kids. You can also sort by color, size, brand, or type: jeans, shirts, jackets. Now think about searching that store online. That’s faceted navigation — filters that help users find exactly what they want.

But here’s the twist: search engines can get very confused by these filters. They can end up crawling and indexing hundreds, even thousands, of near-identical pages. The result is a serious duplicate-content problem.

So how do we keep faceted navigation helpful for users but safe for SEO?

What is Faceted Navigation?

Faceted navigation lets users filter products or content by various attributes. It’s great for user experience.

For example, on a shoe store site, you might see:

- Brand: Nike, Adidas, Puma

- Size: 7, 8, 9, 10

- Color: Black, White, Red

When users click one or more filters, the site usually changes the URL. Something like:

example.com/shoes?brand=nike&color=black&size=10

This creates new combinations of the same basic content.
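To see how quickly this adds up, here is a small Python sketch that counts the filtered URLs the three facets above could produce. It assumes each facet is either unset or set to exactly one value, and that parameters are always written in the same order. Multi-select filters or arbitrary parameter order would push the count much higher.

```python
from itertools import product

# Facet values from the shoe-store example above.
facets = {
    "brand": ["nike", "adidas", "puma"],
    "size": ["7", "8", "9", "10"],
    "color": ["black", "white", "red"],
}

def filtered_urls(base, facets):
    """Yield every URL where each facet is unset or has exactly one value."""
    names = list(facets)
    # None stands for "this facet is not selected".
    options = [[None] + values for values in facets.values()]
    for combo in product(*options):
        params = [f"{name}={value}" for name, value in zip(names, combo) if value is not None]
        if params:  # skip the unfiltered category page itself
            yield base + "?" + "&".join(params)

urls = list(filtered_urls("https://example.com/shoes", facets))
print(len(urls))  # 79 = (3 + 1) * (4 + 1) * (3 + 1) - 1
```

Three small facets already yield 79 distinct filtered URLs for one category, all showing subsets of the same products.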

Why Duplicate Content Matters

Search engines like Google aim to give users the best pages. If your faceted filters create multiple versions of the same content, it confuses the bots. They don’t know which page to show in results.

This can cause:

- Index bloat: Search engines may crawl and index tons of similar pages, wasting your crawl budget.

- Keyword dilution: Several URLs compete with each other for the same keywords.

- Poor rankings: Google might pick the wrong page to rank — or rank none at all.

But don’t worry — we’ve got ways to fix it without removing filters that users love.

Tips to Manage Faceted Navigation Effectively

Here’s how you make both users and search engines happy.

1. Use Canonical Tags

Canonical tags tell search engines which version of a page is the master (preferred) version.

On filtered pages you don’t want indexed, the canonical tag should point to the main category page.

Example:

<link rel="canonical" href="https://example.com/shoes" />

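This helps consolidate ranking signals onto one URL and avoid duplicates. Keep in mind that Google treats the canonical tag as a strong hint, not a directive, so it may still pick a different URL.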
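If your pages are rendered server-side, the canonical URL can be derived by dropping the filter parameters. The Python sketch below assumes that every query parameter on a category URL is a filter and that the clean category path is the preferred version; `canonical_tag` is a hypothetical helper, not part of any framework.

```python
import html
from urllib.parse import urlsplit, urlunsplit

def canonical_tag(url: str) -> str:
    """Return a canonical <link> tag pointing at the unfiltered category page.

    Assumes every query parameter is a filter, so the preferred URL is the
    same scheme, host, and path with the query string and fragment removed.
    """
    parts = urlsplit(url)
    canonical = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    return f'<link rel="canonical" href="{html.escape(canonical)}" />'

print(canonical_tag("https://example.com/shoes?brand=nike&color=black&size=10"))
# <link rel="canonical" href="https://example.com/shoes" />
```

Filtered pages you deliberately want indexed (covered later in this article) would instead get a canonical pointing at their own URL.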

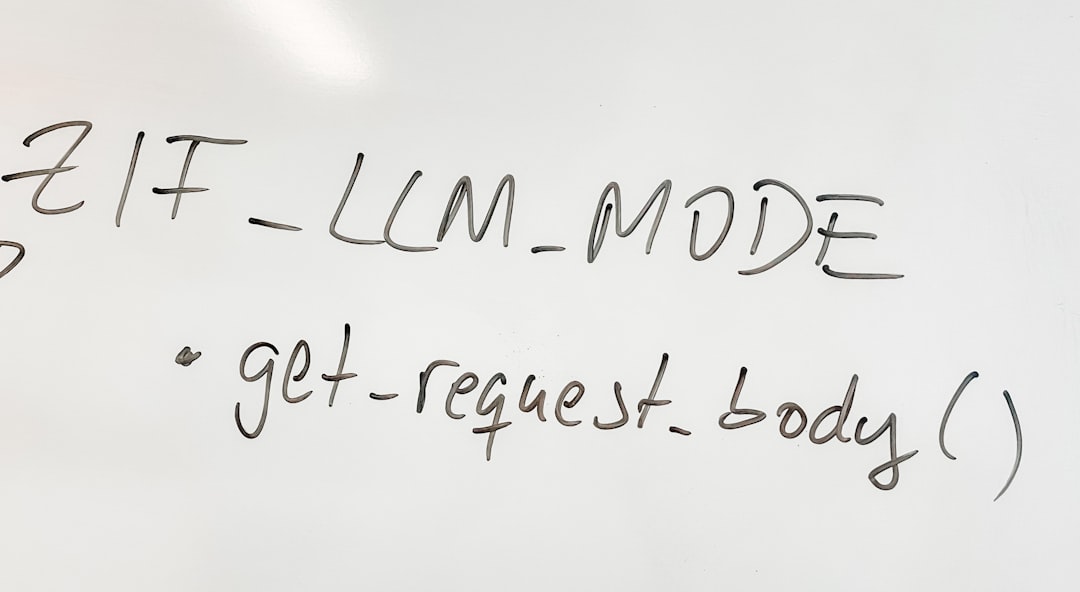

2. Block Crawling of Filtered URLs Using robots.txt

Stop search engines from crawling URLs that contain certain parameters or sit in certain folders.

Example:

User-agent: *

Disallow: /shoes?*

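This stops crawling, not indexing: a blocked URL can still appear in results (usually without a description) if other pages link to it. And because crawlers can’t fetch a blocked page, they never see a canonical or noindex tag on it either. So combine robots.txt with other measures carefully, and don’t block URLs whose canonical or noindex tags you are relying on.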
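Google and other major crawlers treat `*` in a robots.txt path as "any sequence of characters", treat a trailing `$` as "end of URL", and otherwise match rules as prefixes. The Python sketch below is a simplified matcher built on those rules, handy for checking which URLs a rule like `Disallow: /shoes?*` would catch. It ignores `Allow` lines and longest-match precedence, so treat it as a rough check rather than a full robots.txt parser.

```python
import re

def rule_to_regex(rule_path: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regex.

    Simplified matching: '*' matches any run of characters, a trailing '$'
    anchors the end, and everything else is a literal prefix.
    """
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    pattern = ".*".join(re.escape(piece) for piece in rule_path.split("*"))
    return re.compile(pattern + ("$" if anchored else ""))

def is_disallowed(path: str, disallow_rules: list[str]) -> bool:
    """True if any Disallow rule matches the start of the path.

    An empty Disallow value allows everything, so it is skipped.
    Allow rules are not modeled in this sketch.
    """
    return any(rule and rule_to_regex(rule).match(path) for rule in disallow_rules)

rules = ["/shoes?*"]
print(is_disallowed("/shoes?brand=nike&color=black&size=10", rules))  # True
print(is_disallowed("/shoes", rules))  # False
```

The same rule also blocks any other parameterized shoe URL. If your pagination uses a `page` parameter (an assumption here), `/shoes?page=2` is blocked too, which may not be what you want.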

3. Add Noindex Meta Tags

If some filtered pages must be accessible but shouldn’t appear in search results, use:

<meta name="robots" content="noindex, follow">

This keeps those pages out of search results while still letting crawlers follow the links on them, so link equity can flow through.
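Here is a minimal server-side sketch in Python of choosing that tag. It assumes a hypothetical policy where any URL with a query string is a filtered view that gets `noindex, follow`, while the clean category page stays indexable. Crawlers only see this tag on URLs they are allowed to fetch, so these URLs must not also be disallowed in robots.txt.

```python
from urllib.parse import urlsplit

def robots_meta_tag(url: str) -> str:
    """Pick a robots meta tag for a category URL.

    Hypothetical policy: a query string means a filtered view, which should
    stay out of search results while its links are still followed.
    """
    if urlsplit(url).query:
        return '<meta name="robots" content="noindex, follow">'
    return '<meta name="robots" content="index, follow">'

print(robots_meta_tag("https://example.com/shoes?color=red"))
# <meta name="robots" content="noindex, follow">
```

Because `index, follow` is the default behavior, you could also emit no robots tag at all for the indexable pages.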

4. Limit the Number of Combinations

Not all filter combinations are useful. Avoid creating URLs for every possibility.

Perhaps only a few filters, like brand and gender, deserve their own URLs. The rest? Keep them out of crawlable URLs.

Pro tip: Use JavaScript (for example, AJAX requests) to apply low-value filters without changing the URL.
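One way to enforce such a limit is to decide, per filter combination, whether to render a normal crawlable link or a JavaScript-only control. The Python sketch below assumes a hypothetical policy: only `brand`, `gender`, and `color` can appear in a URL, and never more than two of them at once. Swap in whichever facets matter for your catalog.

```python
# Hypothetical policy: which facets may appear in crawlable URLs, and how many.
INDEXABLE_FACETS = {"brand", "gender", "color"}
MAX_FACETS_IN_URL = 2

def gets_crawlable_link(filters: dict[str, str]) -> bool:
    """True if this filter combination should be a plain <a href> link.

    Any other combination would be applied client-side (e.g. via
    JavaScript) without producing a new crawlable URL.
    """
    return (
        0 < len(filters) <= MAX_FACETS_IN_URL
        and set(filters) <= INDEXABLE_FACETS
    )

print(gets_crawlable_link({"brand": "nike", "gender": "men"}))  # True
print(gets_crawlable_link({"brand": "nike", "size": "10"}))     # False
```

A size filter, or a third stacked filter, still works for users; it just never becomes a URL for crawlers to discover.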

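5. Map Out What Each URL Parameter Does

Google Search Console used to offer a URL Parameters tool where you could tell Google what each parameter does. Google retired that tool in 2022 and now works out parameters on its own, so that route is closed.

Classifying your parameters is still worth doing, because it tells you which ones to canonicalize, block, or leave crawlable. For example:

- color: narrows the content (shows a subset of the products)

- sort: just changes the order, not the content

Once you know what each parameter does, express those decisions through canonical tags, robots.txt rules, and noindex tags.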
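Writing the classification down in code makes it enforceable. This Python sketch uses a hypothetical parameter map (the `utm_source` tracking parameter is an added example) to normalize URLs: parameters that don’t change the product set are dropped, and the remaining filters are sorted so that `?color=black&brand=nike` and `?brand=nike&color=black` collapse into one URL.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical classification of this site's query parameters.
PARAM_ROLES = {
    "brand": "narrows",
    "color": "narrows",
    "size": "narrows",
    "sort": "reorders",        # same products, different order
    "utm_source": "tracking",  # doesn't change the content at all
}

def normalize(url: str) -> str:
    """Keep only content-narrowing parameters, in alphabetical order.

    Parameters missing from PARAM_ROLES are dropped as well.
    """
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if PARAM_ROLES.get(key) == "narrows"
    )
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

print(normalize("https://example.com/shoes?sort=price&color=black&brand=nike&utm_source=mail"))
# https://example.com/shoes?brand=nike&color=black
```

The normalized URL is a natural candidate for the canonical tag, and it also tells you which parameter orderings your internal links should never generate.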

6. Consider Pagination Carefully

Filtered pages often have pagination, like page 1, page 2, and so on. These can also create duplicate content.

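Google stopped using rel="next" and rel="prev" as an indexing signal in 2019, although the tags are harmless and other search engines may still read them. Give each paginated page its own self-referencing canonical instead of pointing page 2 onward at page 1, and test how your site handles it.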
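The Python sketch below builds the head tags for one page of a paginated category, with each page as its own canonical. The `page` parameter name is an assumption, and the optional `prev`/`next` links are kept only because they do no harm.

```python
import html

def pagination_head_tags(base_url: str, page: int, last_page: int) -> list[str]:
    """Build <head> tags for one page of a paginated category.

    Page 1 lives at the clean base URL; later pages use a hypothetical
    '?page=N' parameter. Every page is its own canonical.
    """
    def page_url(n: int) -> str:
        return base_url if n == 1 else f"{base_url}?page={n}"

    tags = [f'<link rel="canonical" href="{html.escape(page_url(page))}" />']
    if page > 1:
        tags.append(f'<link rel="prev" href="{html.escape(page_url(page - 1))}" />')
    if page < last_page:
        tags.append(f'<link rel="next" href="{html.escape(page_url(page + 1))}" />')
    return tags

for tag in pagination_head_tags("https://example.com/shoes", 2, 5):
    print(tag)
# <link rel="canonical" href="https://example.com/shoes?page=2" />
# <link rel="prev" href="https://example.com/shoes" />
# <link rel="next" href="https://example.com/shoes?page=3" />
```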

When You Should Allow Filtered Pages to Be Indexed

Not all filtered content is bad. Sometimes, a filtered page targets long-tail keywords that have search volume.

Example:

- “Red Nike running shoes for men”

- “Size 10 white Adidas sneakers”

If those pages:

- Have unique, optimized titles

- Have unique meta descriptions

- Offer real value and match search intent

… then consider letting them be indexed. Just don’t allow everything by default.
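In practice, "don’t allow everything by default" can mean an explicit allowlist of combinations that keyword research shows are worth indexing. Here is a Python sketch; the allowlist entries are hypothetical, loosely based on the example queries above, and in a real setup they would come from your own search data.

```python
# Hypothetical allowlist: filter combinations with proven search demand.
# Each entry is a frozenset of (facet, value) pairs, so order doesn't matter.
INDEXABLE_COMBOS = {
    frozenset({("brand", "nike"), ("color", "red"), ("gender", "men")}),
    frozenset({("brand", "adidas"), ("color", "white"), ("size", "10")}),
}

def is_indexable(filters: dict[str, str]) -> bool:
    """True only for filter combinations explicitly on the allowlist."""
    return frozenset(filters.items()) in INDEXABLE_COMBOS

print(is_indexable({"color": "red", "brand": "nike", "gender": "men"}))  # True
print(is_indexable({"brand": "nike", "color": "red"}))                   # False
```

Allowlisted pages would get a self-referencing canonical and no noindex tag; every other combination keeps the defaults described in the earlier sections.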

How to Make Those Filtered Pages SEO-Friendly

- Make sure content is not thin or duplicated.

- Add breadcrumbs to help navigation.

- Write custom H1s and titles that describe the filter combo.

Think of these pages as mini landing pages: high-quality, focused, and useful.
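Custom H1s and titles can be assembled from the active filters. A minimal Python sketch, assuming a hypothetical fixed word order (size, color, brand, product type, then gender) and a placeholder site name of "Example Shoes":

```python
# Hypothetical word order for turning filters into a readable phrase.
PREFIX_FACETS = ["color", "brand", "type"]

def filter_h1(filters: dict[str, str], category: str = "shoes") -> str:
    """Build an H1 like 'Red Nike Running Shoes for Men' from active filters."""
    words = []
    if "size" in filters:
        words.append(f"Size {filters['size']}")
    words += [filters[f].title() for f in PREFIX_FACETS if f in filters]
    phrase = " ".join(words + [category.title()])
    if "gender" in filters:
        phrase += f" for {filters['gender'].title()}"
    return phrase

def filter_title(filters: dict[str, str], site: str = "Example Shoes") -> str:
    """Page <title>: the H1 phrase plus the (placeholder) site name."""
    return f"{filter_h1(filters)} | {site}"

print(filter_h1({"brand": "nike", "color": "red", "type": "running", "gender": "men"}))
# Red Nike Running Shoes for Men
```

Templated text like this is a starting point; the most valuable filter pages usually deserve hand-edited copy on top.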

Do’s and Don’ts

Here’s a quick cheat sheet:

- Do: Use canonical tags smartly

- Do: Block useless parameters from crawling

- Do: Test and monitor in Google Search Console

- Don’t: Let every filter create a new indexable URL

- Don’t: Rely only on robots.txt (it won’t stop indexing!)

- Don’t: Forget the user — make sure filters are fast and friendly

Testing, Monitoring, and Staying Flexible

SEO for large sites is never set-it-and-forget-it. Monitor:

- Crawl stats in Search Console

- Indexed pages in Search Console’s Page indexing report (a “site:yourdomain.com” search gives only a rough estimate)

- Traffic and ranking of key filtered URLs
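Search Console’s crawl stats give a summary, while your server access logs show exactly which filtered URLs crawlers spend time on. A rough Python sketch, assuming you have already extracted the request paths of Googlebot hits from your logs (log parsing and verifying that a hit really came from Googlebot are left out):

```python
from collections import Counter
from urllib.parse import parse_qsl, urlsplit

def crawl_share_by_parameter(paths: list[str]) -> Counter:
    """Count crawled URLs per query parameter ('(none)' = no parameters)."""
    counts = Counter()
    for path in paths:
        params = {key for key, _ in parse_qsl(urlsplit(path).query)}
        counts.update(params or {"(none)"})
    return counts

# Hypothetical sample of paths requested by Googlebot.
sample = [
    "/shoes",
    "/shoes?color=black",
    "/shoes?color=red&size=10",
    "/shoes?sort=price",
    "/shoes?brand=nike&color=black",
]
print(crawl_share_by_parameter(sample))
```

If a parameter you meant to hide, such as `sort`, keeps drawing a large share of crawler hits, your robots.txt rules or internal links probably need another look.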

And be willing to adjust. Your product catalog will grow. Search engines will update algorithms. You’ll need to stay on top of it.

Wrapping Up with a Few Fun Ideas

Faceted navigation doesn’t have to be scary. It just needs some thoughtful strategies.

It’s like training a puppy. Set boundaries (robots.txt), teach what’s important (canonical), and reward good behavior (high-quality filtered pages).

Keep it flexible, user-friendly, and data-driven.

With the right setup, you can keep users — and Google — happy.

Let the filters roll!